Exascale computing and Alice Recoque at TGCC

The Jules Verne Consortium on the road to exascale

The Jules Verne consortium brings together France, represented by the Grand Equipement National de Calcul Intensif (GENCI) as the hosting entity, associated with the CEA as the hosting site, the Netherlands, represented by SURF (which encompasses the Dutch national high-performance computing centre), and Greece, represented by GRNET (which encompasses the Greek national high-performance computing centre).

The main objective of this consortium is to provide the French and European academic community with a world-class supercomputer with a capacity exceeding 1 exaflop/s (10¹⁸ operations per second in double precision), based on European hardware and software technologies. This machine will enable us to respond to major societal and scientific challenges through the convergence of numerical simulations, big data analysis and artificial intelligence.

In June 2023, the European High Performance Computing Joint Undertaking (EuroHPC JU) selected the Jules Verne consortium to host and operate the second EuroHPC exascale supercomputer in France, at TGCC. The system is intended to exceed the threshold of one billion billion calculations per second.

EuroHPC procurement

In September 2024, the EuroHPC Joint Undertaking (EuroHPC JU) launched a call for tender to select a vendor for the manufacturing, delivery, installation and maintenance of Alice Recoque, the European exascale supercomputer to be located in France, at the CEA DAM Ile-de-France site, within the TGCC (CEA's Very Large Computing Centre).

The system is named Alice Recoque in tribute to a French computer scientist who was a pioneer of the modern era and one of the first female engineers in artificial intelligence. Alice Recoque will replace GENCI's Joliot-Curie supercomputer.

On 18 November 2025, the EuroHPC Joint Undertaking (EuroHPC JU) and the Jules Verne consortium (GENCI, CEA, SURF, GRNET) announced that the proposal from Eviden (Bull), a leader in advanced computing and AI, had been selected for the new European exascale system Alice Recoque. The solution is based on AMD hardware for accelerated computing and a processor from SiPearl, a European designer of CPUs for HPC. The Alice Recoque supercomputer will thus promote sovereign access to HPC, AI and quantum computing for science and innovation.

Alice Recoque supercomputer

Alice Recoque will draw its power from the new-generation AMD Instinct™ MI430X GPUs, designed for sovereign AI and scientific applications. These GPUs will be fed by AMD EPYC Venice processors. With 432 GB of HBM4 memory and 19.6 TB/s of memory bandwidth, the AMD Instinct MI430X GPU will enable Alice Recoque to rank among the most powerful and energy-efficient scientific instruments ever built. Application porting will be facilitated by the GPUs' large memory capacity and by unified memory between CPU and GPU.
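To put the quoted memory figures in perspective, the short sketch below estimates how long a single GPU would need to read its entire HBM4 memory once at peak bandwidth. It uses only the two numbers given above; the function name, the decimal unit convention (1 GB = 10⁹ bytes, 1 TB = 10¹² bytes) and the assumption that peak bandwidth is sustained are illustrative choices, so the result is an idealised lower bound rather than a measured value.

```python
# Figures quoted in the text for one AMD Instinct MI430X GPU.
HBM_CAPACITY_GB = 432       # HBM4 capacity, in gigabytes
HBM_BANDWIDTH_TBPS = 19.6   # peak memory bandwidth, in terabytes per second


def full_memory_sweep_ms(capacity_gb: float, bandwidth_tbps: float) -> float:
    """Idealised time (ms) to read the whole memory once at peak bandwidth."""
    capacity_bytes = capacity_gb * 1e9               # assumption: decimal gigabytes
    bandwidth_bytes_per_s = bandwidth_tbps * 1e12    # assumption: decimal terabytes
    return capacity_bytes / bandwidth_bytes_per_s * 1e3


sweep_ms = full_memory_sweep_ms(HBM_CAPACITY_GB, HBM_BANDWIDTH_TBPS)
print(f"One full pass over HBM takes at least {sweep_ms:.1f} ms")
```

Under these assumptions one full pass takes roughly 22 ms, which gives a rough floor on the time per iteration of any memory-bound kernel that touches the whole memory.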

For scalar applications, it will be possible to use some of the CPU cores transparently, as well as a dedicated partition based on SiPearl Rhea2 processors.

The whole system will be integrated into BullSequana XH3500 computing racks and interconnected by the new European high-performance network system Eviden BXI v3, enabling speeds of 800 Gbit/s between GPUs and 400 Gbit/s between CPUs.

TGCC adaptation works

In anticipation of the installation of Alice Recoque, the CEA undertook to adapt and upgrade the TGCC's infrastructure in 2024 and 2025 to meet the needs of new generations of computing systems. This work included:

- Reinforcing the concrete slab to increase its load capacity to 2,800 kg/m² over an area of 700 m²;

- Increasing the available electrical power by 24 MW and increasing the capacity of the inverters (~10%);

- Creating a dedicated 20 MW warm water cooling loop for direct liquid cooling to increase energy efficiency;

- Adapting the current cooling system for technical rooms, warehouse cooling and air cooling;

- Preparing the annex computer room and supercomputer room.
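As a rough illustration of what the slab reinforcement means, the sketch below multiplies the quoted load capacity by the quoted area. The function name is hypothetical, and the calculation assumes a perfectly uniform load over the full 700 m², which real rack layouts do not produce, so the result is an upper-bound order of magnitude and not an engineering figure.

```python
# Figures quoted in the list above.
SLAB_LOAD_KG_PER_M2 = 2_800   # reinforced load capacity
SLAB_AREA_M2 = 700            # reinforced area


def max_uniform_load_tonnes(load_kg_per_m2: float, area_m2: float) -> float:
    """Total mass (tonnes) the area could carry if loaded uniformly (assumption)."""
    return load_kg_per_m2 * area_m2 / 1000


total_tonnes = max_uniform_load_tonnes(SLAB_LOAD_KG_PER_M2, SLAB_AREA_M2)
print(f"Uniform load limit over the reinforced area: {total_tonnes:,.0f} tonnes")
```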

Refurbished machine room (raised floor temporarily removed). Bull will first deploy the electrical power and cooling interfaces and the networks, then install the raised floor.

New IT room, for storage robotics and various IT peripherals.

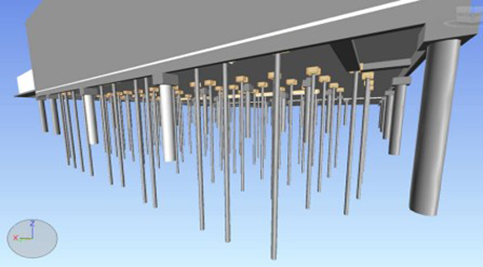

Drilling and installation of new piles deep in the ground to support the concrete floor of the compute room.

67 piles in the clay ground support a metal reinforcement frame for the machine room floor.

Electric distribution modules.

New transformers, behind a reinforcement pile.

New cooling towers – 20 MW capacity.

New warm water loop and cooling towers.